Lorna Mills and Sally McKay


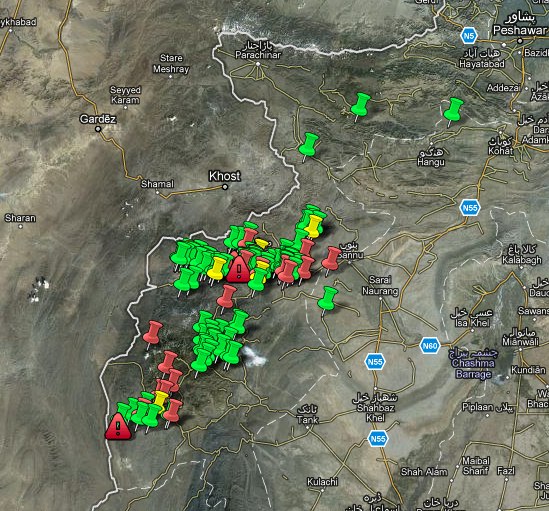

I've been playing with Cleverbot lately. It started when I watched the CBC documentary, Remote Control War (available online). Various experts discussed the inevitability of military deployment of autonomous robots. Right now the US is menacing people on the ground in Pakistan with remote control drones. It's like being buzzed by great big annoying insects that you can't reach with a flyswatter...except they are quite likely to kill you, or your neighbour, or both. The drones' controllers are in the US, sitting at consoles, drinking Starbucks, making decisions and punching the clock. It's bad.

Autonomous robots would maybe be even worse, as they'd just go on in there and make decisions for themselves about when to kill people and when to hold their fire. The army might soon be able to make a pitch that their robots' AI can, say, distinguish between combatants and civilians, but my understanding of AI research is that such a thing is just not possible. As it is, humans can't even do it. That's why they invented the concept of collateral damage. It might be possible to have robots act reliably under controlled, laboratory conditions, but the manifold variables of real-life encounters simply can't be predicted or programmed for ahead of time. (For more on this, see What Computers Can't Do by Hubert Dreyfus.) There will always be a big margin of error and downright randomness. Hilarious in the movies (remember ED-209?) but truly brown-trousers, brain-altering, high-stress awful if you're stuck on the receiving end.

So I went to chit-chat with Cleverbot and see how pop culture AI is coming along. Remember Eliza, the artificial intelligence therapist designed in the 60s? (There's an online version.) I played with Eliza a lot in the 90s and in my opinion, Cleverbot isn't doing a much better job at sounding human than she did. Cb has some potential because it's collecting new data all the time from people who play with it.
In this way it's like 20Q, which is pretty impressive. At least, the classic version, which has been played the most, is really, really good. But 20Q would be easier to design than Cleverbot because there is a set formula and rigid parameters for how the questions go (20Q means 20 questions).

I'd like to know more about the programming behind the syntactical structures in Cleverbot's system. It seems to have Eliza-like strategies of throwing your words back at you in the form of a question. It also throws out random stuff that other people have typed in, often funny and interesting in a totally non-sequitur-ish, Dada kind of way. It has a very poor memory, however, and can't really follow the conversation beyond a few lines. I'm not sure why it's so shallow. But it is remarkably good at grammar! Sometimes words are spelled wrong, and the content makes no sense, but it almost always responds with statements that are structurally valid. All in all, though, it does a poor job of following a train of thought or applying logic in any meaningful way.

Cleverbot is fun, and its flaws are revealing about how language works, but I don't find it convincing, and it actually makes me think that info-tainment AI hasn't progressed much since the Eliza days. I'm sure that DND probably has all kinds of way more sophisticated work in progress, but if the military tries to tell me that they've got an autonomous robotic system that can reliably adhere to the Geneva Conventions, I will not believe them. (Not that anybody calling the shots gives a shit about the Geneva Conventions anymore.)
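
For the curious, here is roughly what I mean by the Eliza-like strategy of throwing your words back at you as a question. This is a toy sketch in Python, not Eliza's or Cleverbot's actual code (I don't know how Cleverbot works inside). The `REFLECTIONS` table, the `reflect` function and the "Why do you say" template are all made up for illustration, and it only copes with first-person statements.

```python
import re

# Hypothetical first-person to second-person swaps, for illustration only.
# A real Eliza-style script would carry many more rules than this.
REFLECTIONS = {
    "i": "you",
    "i'm": "you are",
    "me": "you",
    "my": "your",
    "mine": "yours",
    "am": "are",
}


def reflect(statement: str) -> str:
    """Echo a statement back as a question by swapping pronouns."""
    # Lowercase the input and keep only words (letters and apostrophes).
    words = re.findall(r"[a-z']+", statement.lower())
    # Swap each first-person word; leave every other word untouched.
    swapped = [REFLECTIONS.get(word, word) for word in words]
    return "Why do you say " + " ".join(swapped) + "?"


if __name__ == "__main__":
    print(reflect("I am worried about my robot."))
```

There is no memory and no understanding anywhere in there, just word substitution, which is why a reply can be structurally valid and still go nowhere.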